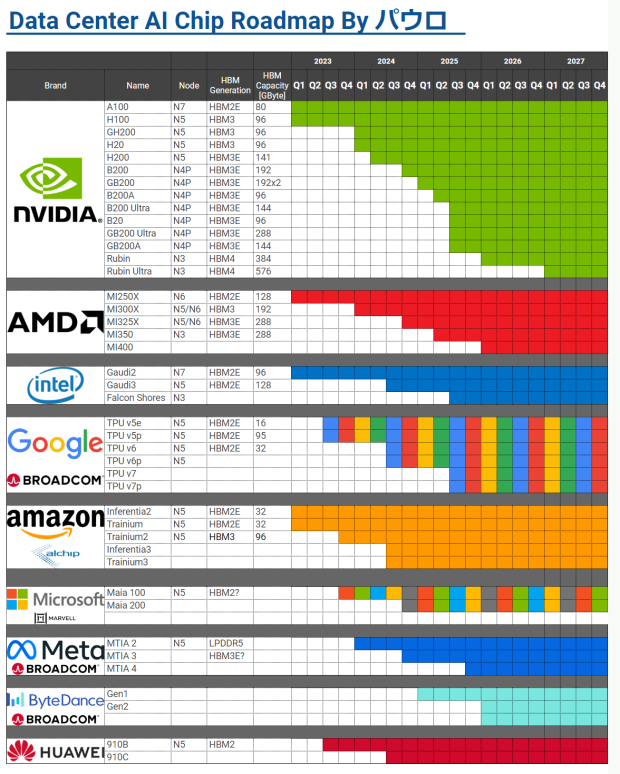

A data center AI chip roadmap recently posted on X gives us a good look at what companies already have on the market, and what's in the AI chip pipeline through to 2027. Check it out:


The list covers NVIDIA, AMD, Intel, Google, Amazon, Microsoft, Meta, ByteDance, and Huawei. NVIDIA's AI GPUs run from the Ampere A100 through the Hopper H100, GH200, and H200, and on into Blackwell with the B200A, B200 Ultra, GB200 Ultra, and GB200A. After that come Rubin and Rubin Ultra, which we all know are on the way, both rocking next-gen HBM4 memory.

AMD's growing Instinct MI series of AI accelerators is also there, running from the MI250X through to the new MI350, with the upcoming MI400 listed for 2026 and beyond.

Google is a close third with its TPU series, listed from the TPU v5e through to the next-gen TPU v7p coming in 2025. Intel has its Gaudi 2 and Gaudi 3 on the list, with its next-gen Falcon Shores AI processor not expected until 2H 2025.

We're expecting NVIDIA's next-generation Rubin R100 AI GPUs to use a 4x reticle design (up from 3.3x on Blackwell), built on TSMC's new N3 process node with its bleeding-edge CoWoS-L packaging technology. TSMC recently talked about chips of up to 5.5x reticle size arriving in 2026 on a 100 x 100mm substrate with room for 12 HBM sites, versus 8 HBM sites on current-gen 80 x 80mm packages.
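To put those reticle multiples into perspective, here's a quick Python sketch that turns them into approximate areas. It assumes the standard 26 x 33mm lithography field (858 mm²) as the "1x reticle" unit and treats each multiple as a straight area multiplier. The roadmap doesn't give actual interposer dimensions, so treat these as ballpark figures. The function and variable names are just for illustration.

```python
# Assumption: "1x reticle" = the standard 26 mm x 33 mm lithography field.
RETICLE_MM2 = 26 * 33  # 858 mm^2


def reticle_area_mm2(multiple: float) -> float:
    """Approximate footprint of an N-times-reticle design, in mm^2."""
    return multiple * RETICLE_MM2


# Reticle multiples quoted in the article (Rubin's 4x is an expectation).
designs = {
    "Blackwell (3.3x)": 3.3,
    "Rubin R100, expected (4x)": 4.0,
    "TSMC 2026 plan (5.5x)": 5.5,
}
for name, mult in designs.items():
    print(f"{name}: ~{reticle_area_mm2(mult):,.0f} mm^2")

# Package substrate growth versus HBM site count, using the quoted figures.
current_substrate = 80 * 80    # 6,400 mm^2, 8 HBM sites
next_substrate = 100 * 100     # 10,000 mm^2, 12 HBM sites
area_ratio = next_substrate / current_substrate  # exactly 1.5625
hbm_ratio = 12 / 8                               # exactly 1.5
print(f"Substrate area ratio: {area_ratio}x, HBM site ratio: {hbm_ratio}x")
```

The takeaway is that the jump to 12 HBM sites roughly tracks the ~56% larger substrate area.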

TSMC also plans to shift to a new SoIC design that will allow chips larger than 8x reticle size on a bigger 120 x 120mm package. As Wccftech points out, though, these designs are still being planned, so we can probably expect Rubin R100 AI GPUs to land at around 4x reticle size.
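One way to sanity-check TSMC's plans is to see how much of each package substrate the largest planned silicon would cover. The Python sketch below again assumes a 26 x 33mm (858 mm²) field as "1x reticle" and uses 8x as the lower bound for the SoIC configuration. It ignores HBM stacks, keep-out zones, and real interposer shapes, so it is only a rough area comparison. The helper name is hypothetical.

```python
# Assumption: "1x reticle" = the standard 26 mm x 33 mm lithography field.
RETICLE_MM2 = 26 * 33  # 858 mm^2

# (reticle multiple, square substrate edge in mm), from the figures above.
configs = {
    "5.5x on 100 x 100 mm (2026)": (5.5, 100),
    "SoIC, 8x on 120 x 120 mm": (8.0, 120),
}


def silicon_fraction(multiple: float, substrate_edge_mm: float) -> float:
    """Share of a square substrate covered by an N-times-reticle footprint."""
    return multiple * RETICLE_MM2 / substrate_edge_mm ** 2


for name, (mult, edge) in configs.items():
    print(f"{name}: {mult * RETICLE_MM2:,.0f} mm^2 of silicon, "
          f"{silicon_fraction(mult, edge):.0%} of the substrate")
```

Both configurations work out to roughly 47 to 48% of the substrate. This suggests the bigger 120 x 120mm package scales up alongside the silicon instead of simply packing more of it onto the same area.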